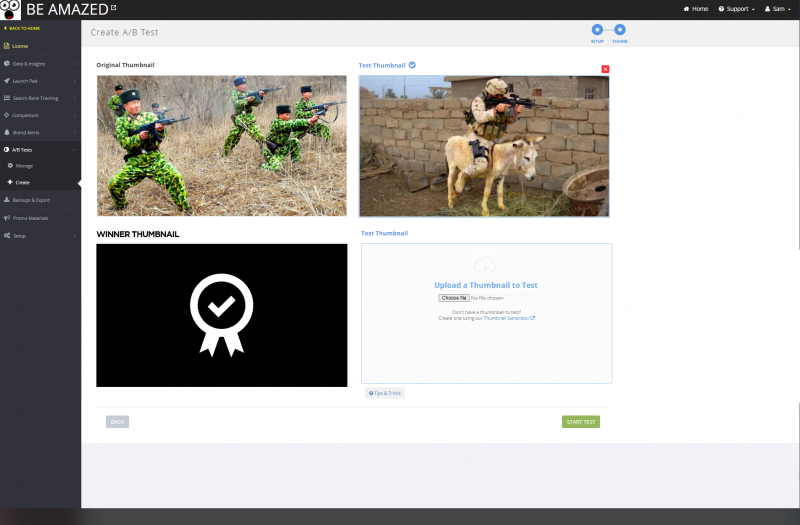

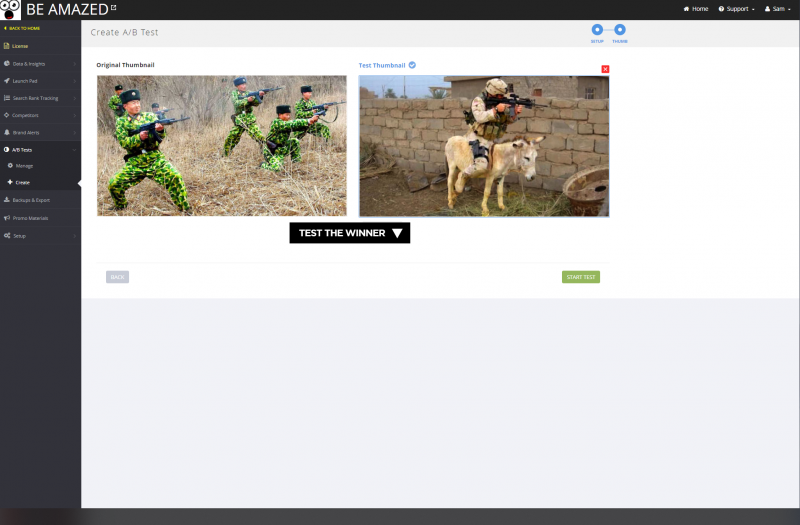

Currently, it takes a long time to prepare and analyse tests of the different titles and thumbnails that I come up with for each video. Let's say I come up with 10 different thumbnails and 10 different titles for a video (it's an extreme example, but just run with it). With the current version of TubeBuddy, you have to test each thumbnail and each title one by one to see which works best. That means logging on after each test is complete, analysing the results, setting the best version, and then setting up a new A/B test. This takes a lot of time to do manually.

It would be a lot easier if a TubeBuddy user could upload multiple thumbnails all at once and let TubeBuddy test all possible combinations to see which one is the best over time. It would also be great if you could do this with titles. So, for example, thumbnail-1+title-1 tested against thumbnail-2+title-2, against thumbnail-3+title-3, against thumbnail-4+title-4, etc. All of this would be done automatically, so that instead of only being able to test one thumbnail change or one title change at a time, TubeBuddy could queue up the tests and work its way down the queue.

Let's say the user chooses 'statistical significance'. Then once a thumbnail, or thumbnail+title combination, comes out on top of its pairing, it's automatically tested against the next version in the queue. This way, when the whole test is complete, TubeBuddy could produce a ranking of the submitted versions from best to worst. It would save a lot of the time spent manually testing lots of versions of thumbnails and titles.
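To make the queue idea a bit more concrete, here is a rough Python sketch of the "winner stays on" flow described above. Everything in it is an assumption for illustration only: `run_queue`, `pick_winner`, and the made-up click-through numbers are hypothetical and have nothing to do with how TubeBuddy actually works. In a real version, `pick_winner` would be a full A/B test that runs until it reaches statistical significance.

```python
from collections import deque
from typing import Callable, List, Tuple

# A variant is a (thumbnail, title) pair, e.g. ("thumbnail-1", "title-1").
Variant = Tuple[str, str]


def run_queue(
    variants: List[Variant],
    pick_winner: Callable[[Variant, Variant], Variant],
) -> List[Variant]:
    """Test the queued variants one pairing at a time.

    The current winner stays on and faces the next variant in the queue.
    Returns the variants ordered by when they were knocked out: the final
    winner first, the earliest loser last. This is only a rough ranking,
    since most variants never face each other directly.
    """
    if not variants:
        return []
    queue = deque(variants)
    champion = queue.popleft()
    eliminated: List[Variant] = []
    while queue:
        challenger = queue.popleft()
        winner = pick_winner(champion, challenger)  # hypothetical A/B test
        loser = challenger if winner == champion else champion
        eliminated.append(loser)
        champion = winner
    return [champion] + eliminated[::-1]


# Made-up click-through rates standing in for real test results.
fake_ctr = {"thumbnail-1": 0.04, "thumbnail-2": 0.07, "thumbnail-3": 0.05}


def higher_ctr(a: Variant, b: Variant) -> Variant:
    """Stand-in for an A/B test: the variant with the higher fake CTR wins."""
    return a if fake_ctr[a[0]] >= fake_ctr[b[0]] else b


variants = [
    ("thumbnail-1", "title-1"),
    ("thumbnail-2", "title-2"),
    ("thumbnail-3", "title-3"),
]
ranking = run_queue(variants, higher_ctr)
for place, (thumbnail, title) in enumerate(ranking, start=1):
    print(place, thumbnail, title)
```

With N versions in the queue this needs only N-1 tests, which is the time saving I'm after. The trade-off is that the ranking below first place is approximate, because a version that loses early is never compared against the ones that lose later.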

TubeBuddy Suggestion Automatic Bulk Thumbnail / Title A/B Tester

- Thread starter TBfan12

- Start date